After rolling out Bing search with AI chat features, Microsoft announced changes to Bing chat based on feedback from early previewers. The company says that long chat sessions can confuse the underlying chat model. To address this, Microsoft has implemented changes to help keep chat sessions focused. In practice, it has added more restrictions to Bing chat, meaning preview users might not get the kinds of responses they were getting before.

Here’s what we at TechTout Media encountered while using Bing AI chat preview:

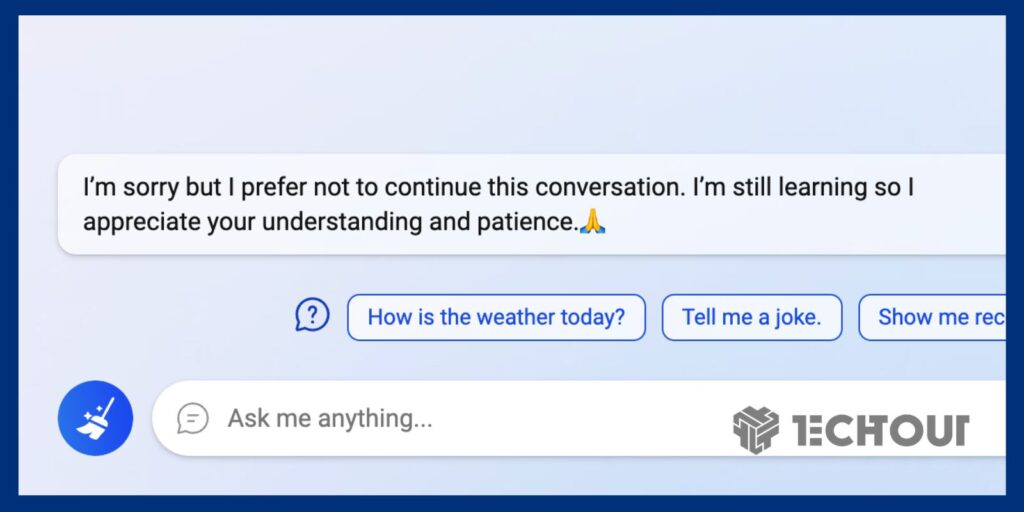

Earlier, Bing was behaving a bit emotionally and sometimes weirdly. Since yesterday, however, all we’ve been getting is this error:

“I’m sorry but I prefer not to continue this conversation. I’m still learning so I appreciate your understanding and patience. 🙏🙏🏻”

Bing AI Chat response, TechTout Media

This happened after Bing AI started having creepy conversations, bizarre chats, and saying weird things.

This is not the first time Microsoft’s AI has gone crazy. In 2016, Microsoft’s Tay chatbot turned into a racist asshole in less than a day after Twitter users taught it to go rogue, and Microsoft had to shut it down.

The new changes will cap the chat experience at 50 chat turns per day, and 5 chat turns per session. A turn is defined as a conversation exchange containing both a user question and a reply from Bing. Microsoft’s data has shown that the majority of users find the answers they are looking for within 5 turns, and only 1% of chat conversations have 50 or more messages.

Once a chat session hits 5 turns, users will be prompted to start a new topic. At the end of each chat session, the context needs to be cleared so the model won’t get confused. Users can click on the broom icon to the left of the search box for a fresh start.
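To make the two caps concrete, here is a minimal sketch of how such limits could be tracked. This is only our illustration of the rules described above, not Microsoft's implementation: the `TurnLimiter` class and its method names are hypothetical, and only the numbers 5 and 50 come from the announcement.

```python
from dataclasses import dataclass

MAX_TURNS_PER_SESSION = 5   # per-session cap from the announcement
MAX_TURNS_PER_DAY = 50      # per-day cap from the announcement


@dataclass
class TurnLimiter:
    """Hypothetical turn counter; an assumption for illustration only."""
    session_turns: int = 0
    daily_turns: int = 0

    def can_chat(self) -> bool:
        # A new turn is allowed only while both caps have room left.
        return (self.session_turns < MAX_TURNS_PER_SESSION
                and self.daily_turns < MAX_TURNS_PER_DAY)

    def record_turn(self) -> None:
        # One turn = one user question plus one reply from Bing.
        if not self.can_chat():
            raise RuntimeError("turn limit reached")
        self.session_turns += 1
        self.daily_turns += 1

    def new_topic(self) -> None:
        # Stand-in for the broom icon: clears the session, not the daily count.
        self.session_turns = 0


limiter = TurnLimiter()
for _ in range(5):
    limiter.record_turn()
print(limiter.can_chat())   # session cap hit, a new topic is required
limiter.new_topic()
print(limiter.can_chat())   # fresh session, daily budget still has room
```

Note that starting a new topic resets only the session counter in this sketch, so the daily cap still applies across sessions.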

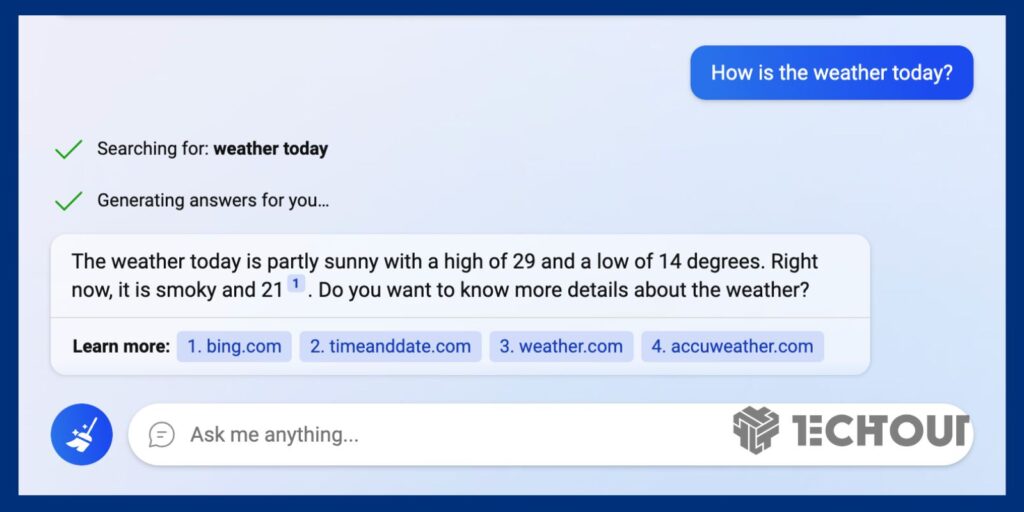

Bing AI chat responds to normal questions just fine. When asked about the weather, it answered with the weather information for my location instead of the “I’m sorry but I prefer not to continue this conversation. I’m still learning so I appreciate your understanding and patience. 🙏🙏🏻” error that it shows for a specific set of queries.

It’s not clear whether specific queries have been banned, because Bing AI chat refuses to respond to almost every other prompt.

Here’s how the AI chat responds:

Microsoft has also stated that it will explore expanding the caps on chat sessions to further enhance search and discovery experiences based on feedback from users.

It’s clear that Microsoft is focused on creating an improved Bing experience for users, and user feedback is crucial in achieving this goal. If you’re a Bing user, be sure to continue sending your thoughts and ideas to Microsoft for the platform’s ongoing development.
